Single-View Hair Modeling for Portrait Manipulation

- Menglei Chai¹

- Lvdi Wang²

- Yanlin Weng¹

- Yizhou Yu³

- Baining Guo²

- Kun Zhou¹

- ¹Zhejiang University

- ²Microsoft Research Asia

- ³The University of Hong Kong

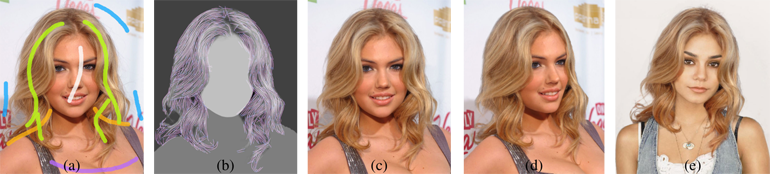

Given a portrait image and a few user-drawn strokes as input (a), our method generates a strand-based 3D hair model (b), in which a subset of the reconstructed fibers is highlighted. The hair model can be used to convert the input portrait into a pop-up model (c) that can be rendered from a novel view (d). It also enables several interesting applications, such as transferring the hairstyle of one subject to another (e). Original images courtesy of Getty Images (a) and Andrew MacPherson (e).

Abstract

Human hair is known to be very difficult to model or reconstruct. In this paper, we focus on applications related to portrait manipulation and take an application-driven approach to hair modeling. To enable an average user to achieve interesting portrait manipulation results, we develop a single-view hair modeling technique that requires only modest user interaction and meets the unique requirements of portrait manipulation. Our method relies on heuristics to generate a plausible, high-resolution, strand-based 3D hair model. This is made possible by an effective, high-precision 2D strand tracing algorithm that explicitly models uncertainty and local layering during tracing. The depth of the traced strands is then solved through an optimization that simultaneously considers depth constraints, layering constraints, and regularization terms. Our single-view hair modeling enables a number of interesting applications that were previously challenging, including transferring the hairstyle of one subject to another, potentially in a different pose; rendering the original portrait from a novel view; and image-space hair editing.
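The abstract names the three ingredients of the depth optimization but not its exact energy. The sketch below is a hypothetical illustration of how such a solve could be set up, not the paper's formulation. It assumes: soft quadratic depth constraints on selected samples; a regularizer that penalizes second differences of depth along each traced strand; layering given as (front, back) sample pairs with the convention that larger z is closer to the viewer; and inequality handling by a simple penalty/active-set loop that re-solves a linear least-squares system whenever a new layering violation appears. The function name, weights, and margin are all invented for illustration.

```python
import numpy as np


def _row(n, coeffs):
    """Build one dense row of the least-squares system from (index, coeff) pairs."""
    row = np.zeros(n)
    for idx, c in coeffs:
        row[idx] += c
    return row


def solve_strand_depths(num_samples, strands, depth_targets, layering,
                        w_depth=10.0, w_smooth=1.0, w_layer=100.0,
                        margin=0.1, max_iters=20):
    """Hypothetical sketch: estimate a depth z for every traced 2D strand sample.

    strands:       list of strands, each an ordered list of sample indices
    depth_targets: {sample index: target depth} (soft depth constraints)
    layering:      list of (front, back) pairs; assumed convention is that
                   larger z is closer to the viewer, so we want
                   z[front] >= z[back] + margin
    """
    z = np.zeros(num_samples)
    active = set()  # layering pairs currently enforced as penalty equations
    for _ in range(max_iters):
        rows, rhs = [], []
        # Depth term: w_depth * (z_i - d_i) should be zero.
        for i, d in depth_targets.items():
            rows.append(_row(num_samples, [(i, w_depth)]))
            rhs.append(w_depth * d)
        # Regularization: second differences of depth along each strand vanish.
        for s in strands:
            for k in range(1, len(s) - 1):
                rows.append(_row(num_samples, [(s[k - 1], w_smooth),
                                               (s[k], -2.0 * w_smooth),
                                               (s[k + 1], w_smooth)]))
                rhs.append(0.0)
        # Layering: each active pair is pulled toward z[front] - z[back] = margin.
        for f, b in active:
            rows.append(_row(num_samples, [(f, w_layer), (b, -w_layer)]))
            rhs.append(w_layer * margin)

        z = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)[0]
        violated = {(f, b) for f, b in layering if z[f] < z[b] + margin - 1e-6}
        if violated <= active:  # no new violations, so the active set is stable
            break
        active |= violated
    return z
```

As a usage example, two five-sample strands pinned to depth 0 at their endpoints, with sample 7 of the second strand required to lie in front of sample 2 of the first, bend apart near the crossing: `solve_strand_depths(10, [[0, 1, 2, 3, 4], [5, 6, 7, 8, 9]], {0: 0.0, 4: 0.0, 5: 0.0, 9: 0.0}, [(7, 2)], margin=0.5)`. Because the layering term is a soft penalty, the gap at the crossing comes out very close to, but slightly below, the requested margin. An exact quadratic-programming solver would enforce it strictly.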

Keywords

strand tracing, portrait pop-ups, hairstyle replacement